Research

We are active in theoretical, social and practical aspects of robotics research. We contribute to the domains of probabilistic state estimation, planning, model selection, and robot learning. We are interested in the societal impact and the cultural embedding of robotics as well as applications of robots and autonomous systems.

Human Perception and Tracking

The ability to perceive humans is a key component for many robots, interactive systems and intelligent vehicles. We focus on the perception of humans in 2D and 3D range data as well as RGB-D data, as we believe that this sensory modality is particularly suited for this task in terms of accuracy and robustness with respect to ambient conditions.

People Detection and Tracking under Social Constraints in 2D, 3D and RGB-D Data

Range finders such as Lidar or Radar are popular sensors for their robustness and accuracy. In our line of work on range-based people detection and tracking, we exploit the peculiarities of depth information, also in combination with image data, to detect, track and analyze humans and their behavior. Concretely, in depth data, objects such as people can be characterized by geometric and statistical properties at multiple scales. Exploiting such properties, we develop supervised and unsupervised, featureless and feature-based approaches to detect humans in 2D, 3D, and RGB-D data. In doing so, we were the first to propose principled detection approaches for people in all these data types.

For tracking, we use and extend the multi-hypothesis tracking approach, a sound probabilistic technique to integrate data association decisions over time. It generates and rates different explanations of the state of the world, called hypotheses. We extend this approach to include learned place-dependent priors on human behavior and model-based knowledge from social science that introduce social context in tracking. The socially informed system outperforms the regular tracking approach in all our experiments.

Related Publications (Selection)

Spinello L., Arras K.O., "People Detection in RGB-D Data", IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS'11), San Francisco, USA, 2011. pdf | bib | data sets

Luber M., Spinello L., Arras K.O., "People Tracking in RGB-D Data With Online-Boosted Target Models", IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS'11), San Francisco, USA, 2011. pdf | bib | data sets

Luber M., Tipaldi G.D., Arras K.O., "Place-Dependent People Tracking", International Journal of Robotics Research (IJRR), vol. 30, no. 3, March 2011. pdf | bib | data sets

Spinello L., Luber M., Arras K.O., "Tracking People in 3D Using a Bottom-Up Top-Down Detector", IEEE International Conference on Robotics and Automation (ICRA'11), Shanghai, China, 2011. pdf | bib | data sets

Luber M., Stork J.A., Tipaldi G.D., Arras K.O., "People Tracking with Human Motion Predictions from Social Forces", IEEE International Conference on Robotics and Automation (ICRA'10), Anchorage, USA, 2010. pdf | bib

Arras K.O., Martinez-Mozos O. (eds.), "Special issue on People Detection and Tracking", International Journal of Social Robotics, vol. 2, nr. 1, March 2010. read online (SpringerLink) | bib

Videos

People detection in RGB-D data [YouTube link]

Multi-Model Hypothesis Tracking of Groups of People

People in densely populated environments typically form groups that split and merge. In this work we track groups of people so as to reflect this formation process and gain efficiency in situations where maintaining the state of individual people would be intractable. We pose the group tracking problem as a recursive multi-hypothesis model selection problem in which we hypothesize over both the partitioning of tracks into groups (models) and the association of observations to tracks (assignments). Model hypotheses that include split, merge and continuation events are first generated in a data-driven manner and then validated by means of the assignment probabilities conditioned on the respective model. Experiments with a stationary and a moving platform show that, in populated environments, tracking groups is clearly more efficient than tracking people separately. The system runs in real time on a typical desktop computer.

Related Publications

Lau B., Arras K.O., Burgard W., "Multi-Model Hypothesis Group Tracking and Group Size Estimation", International Journal of Social Robotics, vol. 2, nr. 1, March 2010. pdf | bib | data sets and more information

Lau B., Arras K.O., Burgard W., "Tracking Groups of People with a Multi-Model Hypothesis Tracker", IEEE International Conference on Robotics and Automation (ICRA'09), Kobe, Japan, 2009. pdf | bib | data sets and more information

Videos

Video [.mpeg, 14MB]

Unsupervised Learning and Classification of Dynamic Objects

Robots in the real world share their environment with many dynamic objects such as humans, animals, vehicles, or other robots. The ability to recognize, track and classify such objects is of fundamental importance. As the variability of dynamic objects is large in general, it is hard to predefine suitable models for their appearance and dynamics. In this work, we present an unsupervised learning approach to this model-building problem. We describe an exemplar-based model for representing the time-varying appearance of objects in planar laser scans as well as a clustering procedure that builds a set of object classes from given training sequences. Extensive experiments with real data demonstrate that our system is able to autonomously learn useful models for, e.g., pedestrians, skaters, cyclists without being provided with external class information.

Related Publications

Luber M., Arras K.O., Plagemann C., Burgard W., "Classifying Dynamic Objects: An Unsupervised Learning Approach", Autonomous Robots, vol. 26, nr. 2-3, 2009. pdf | bib

Luber M., Arras K.O., Plagemann C., Burgard W., "Classifying Dynamic Objects: An Unsupervised Learning Approach", Robotics: Science and Systems (RSS'08), Zurich, Switzerland, 2008. pdf | bib

Videos

Tracks of different dynamic objects [.avi, 6.7MB]

Socially-Aware Task and Motion Planning

As robots enter environments that they share with people, human-aware planning and interaction become key tasks to be addressed. To do so, robots need to reason about where and when humans are engaged in which activity. This knowledge is fundamental for robots to smoothly blend their motions, tasks and schedules into the behaviors, workflows and routines of humans. We believe that this ability is key in the attempt to build socially acceptable robots for many domestic and service applications. In our research, we address this issue by learning a nonhomogeneous spatial Poisson process whose rate function encodes the occurrence probability of human activities in space and time. We then study two novel problems for human-robot interaction in social environments. The first one is the maximum encounter probability planning problem, where a robot aims to find the path along which the probability of encountering a person is maximized. The second one is the minimum interference coverage problem, where a robot performs a coverage task in a socially compatible way by reducing the hindrance or annoyance caused to people. An example is a noisy vacuum robot that has to cover the whole apartment, having learned that at lunch time the kitchen is a bad place to clean (see picture).

Related Publications

Tipaldi G.D., Arras K.O., "Please do not disturb! Minimum Interference Coverage for Social Robots", IEEE/RSJ Int. Conference on Intelligent Robots and Systems (IROS'11), San Francisco, USA, 2011. pdf | bib

Tipaldi G.D., Arras K.O., "Planning Problems for Social Robots", International Conference on Automated Planning and Scheduling (ICAPS'11), Freiburg, Germany, 2011. pdf | bib

Tipaldi G.D., Arras K.O., "I Want my Coffee Hot! Learning to Find People Under Spatio-Temporal Constraints", IEEE International Conference on Robotics and Automation (ICRA'11), Shanghai, China, 2011. pdf | bib

Mueller J., Stachniss C., Arras K.O., Burgard W., "Socially Inspired Motion Planning for Mobile Robots in Populated Environments", Cognitive Systems 2008, Cognitive Systems Monographs, Springer, 2010. pdf | bib
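The maximum encounter probability idea described in this section can be illustrated with a minimal Python sketch. It assumes the learned rate function has been discretized into a table over (cell, time slot) pairs, that the robot dwells a fixed time in each cell, and that each pair is visited at most once; the names `encounter_probability`, `max_encounter_path` and the `RATE` values are hypothetical and not taken from the publications above. For a Poisson process, the probability of at least one event along a path is one minus the exponential of the negative accumulated rate.

```python
import math

def encounter_probability(path, rate, dwell=1.0):
    """Probability of at least one encounter along a path.

    path  : list of (cell, time_slot) pairs, each visited at most once
    rate  : dict mapping (cell, time_slot) -> Poisson rate (events per unit time)
    dwell : time spent in each visited cell (assumed constant)
    """
    expected_events = sum(rate.get(step, 0.0) * dwell for step in path)
    return 1.0 - math.exp(-expected_events)

def max_encounter_path(candidates, rate, dwell=1.0):
    """Pick the candidate path with the highest encounter probability."""
    return max(candidates, key=lambda p: encounter_probability(p, rate, dwell))

# Hypothetical rates for two rooms at two hours of the day.
RATE = {
    ("kitchen", 12): 0.8, ("office", 12): 0.1,
    ("kitchen", 9): 0.05, ("office", 9): 0.6,
}

if __name__ == "__main__":
    morning = [[("kitchen", 9)], [("office", 9)]]
    print(max_encounter_path(morning, RATE))
```

A real planner would search over paths in the map rather than choose from a short candidate list; the sketch only shows how the rate function turns into a path score. Minimizing the same score, instead of maximizing it, gives a simple proxy for low-interference behavior.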

Simultaneous Localization and Mapping

The SLAM problem, that is, building a map while simultaneously using that map to self-localize, is considered one of the key challenges in robotics. Our contributions are a novel, particularly robust SLAM front-end using generalized features and a consistent method to probabilistically represent and track the extension information of geometric features such as lines.

FLIRT (Fast Laser Interest Region Transform)

FLIRT interest regions are generalized landmarks in 2D range data for robot localization and SLAM. The approach has recently been proposed as a novel data association front-end for particularly challenging environments at little computational cost. While most approaches to robot navigation based on 2D range data still fail in densely populated environments or open spaces with very sparse readings, FLIRT features unite the advantages of classical feature-based navigation with the generality of scan-based raw data techniques. Applied to typical navigation problems such as incremental mapping, localization and SLAM, the method is on par with the state of the art in terms of accuracy and extends current research in terms of generality and robustness. FLIRT features also have great potential for a unified treatment of range and image data. The C++ FLIRT code is open-source. See the FLIRTlib link for documentation and downloads.

Related Publications

Tipaldi G.D., Braun M., Arras K.O., "FLIRT: Interest Regions for 2D Range Data with Applications to Robot Navigation", 12th Int. Symposium on Experimental Robotics (ISER'10), New Delhi, India, 2010. pdf | bib

Tipaldi G.D., Arras K.O., "FLIRT - Interest Regions for 2D Range Data", Proc. IEEE International Conference on Robotics and Automation (ICRA'10), Alaska, USA, 2010. pdf | bib

Videos

Global robot localization with FLIRT features [YouTube link]

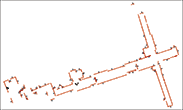

Line-Based SLAM

SLAM with non-point features, that is, features with a physical extension, has received little attention in the past, despite its relevance for indoor environments. One of the reasons is the necessity to cope with the representation problem for uncertain geometric entities. This is the question of how to represent such features so that their parameter estimates remain consistent during the SLAM process. Another challenge with non-point features is the question of how to maintain and update the extension information over the estimation cycle. To address these issues, we first define multi-segment lines as a new feature type for indoor environments that represent an arbitrary number of finite-length segments on the same supporting line. We derive expressions for location-dependent and location-independent geometric constraints of multi-segment lines, and we extend the multi-segment lines concept to general features of physical extension using the SPmodel, a representation approach for uncertain geometric entities. As a result, maps with multi-segment lines are much more compact and consistent in their feature representation than maps with regular segments. As lines give excellent pose orientation estimates, we found that large maps can be built even with simple data association strategies.

Related Publications

Wulf O., Arras K.O., Christensen H.I., Wagner B., "2D Mapping of Cluttered Indoor Environments by Means of 3D Perception", IEEE International Conference on Robotics and Automation (ICRA'04), New Orleans, USA, 2004. pdf | bib

Arras K.O., "Chapter 7: SLAM with Relative Features", in Feature-Based Mobile Robot Navigation in Known and Unknown Environments, Thèse doctorale Nr. 2765, Swiss Federal Institute of Technology Lausanne (EPFL), June 2003. pdf | bib

Martinelli A., Tapus A., Arras K.O., Siegwart R., "Representations and Maps for Real World Navigation", 11th International Symposium of Robotics Research (ISRR'03), Siena, Italy, 2003. bib

Wullschleger F.H., Arras K.O., Vestli S.J., "A Flexible Exploration Framework for Map Building", 3rd European Workshop on Advanced Mobile Robots (Eurobot'99), Zurich, Switzerland, Sept. 6-9, 1999. pdf | bib

Videos

Bakery data set 1 (raw data) [ani gif, 0.5MB] | Line-based map 1 [ani gif, 200kB]

Robot Localization

If efficiency, robustness and accuracy are needed, the feature-based approach to robot localization is still a superb choice. It allows for elegant solutions to both localization problems, pose tracking and global localization. The results of our research in this area have been successfully employed in a large-scale mass exhibition project with over 3,300 km travel distance. The software from this project has been licensed by several companies.

Global EKF Localization

The first one to globally localize a robot, that is, to estimate its pose in a known map without prior knowledge, was Drumheller in 1987. Our research follows the same line of work, which relies on a constraint-based search in the interpretation tree. Concretely, we introduce a visibility constraint and a new multi-segment line feature for which we derive expressions for its geometric constraints. Multi-segment lines have particularly strong constraints that help to keep the number of location hypotheses small. For estimation, we pursue a maximum-likelihood multi-hypothesis approach using a mixture of Kalman filters that, as opposed to particle filter approaches, produces exactly as many hypotheses as needed to represent the current level of ambiguity. If, for instance, the robot is uniquely localized, only a single hypothesis needs to be maintained. Once the robot is localized, we further propose a novel multi-hypothesis tracking algorithm that generates location hypotheses locally, bounding the complexity of the constraint-based search to a locality around the hypothesis. As an example result, we were able to globally localize a robot in a highly dynamic mass exhibition environment within several tens to hundreds of milliseconds on an embedded CPU, with a typical positioning accuracy of 1 cm.

Related Publications

Arras K.O., Castellanos J.A., Schilt M., Siegwart R., "Feature-Based Multi-Hypothesis Localization and Tracking Using Geometric Constraints," Robotics and Autonomous Systems Journal, vol. 44, no. 1, 2003. pdf | bib

Arras K.O., "Chapter 5: Global EKF Localization", in Feature-Based Mobile Robot Navigation in Known and Unknown Environments, Thèse doctorale Nr. 2765, Swiss Federal Institute of Technology Lausanne (EPFL), June 2003. pdf | bib

Arras K.O., Philippsen R., Tomatis N., de Battista M., Schilt M., Siegwart R., "A Navigation Framework for Multiple Mobile Robots and its Application at the Expo.02 Exhibition," IEEE International Conference on Robotics and Automation (ICRA'03), Taipei, Taiwan, 2003. pdf | bib

Arras K.O., Castellanos J.A., Siegwart R., "Feature-Based Multi-Hypothesis Localization and Tracking for Mobile Robots Using Geometric Constraints," IEEE International Conference on Robotics and Automation (ICRA'02), Washington DC, USA, 2002. pdf | bib

Videos

Real-time multi-hypothesis localization and tracking, Run 1 [ani gif, 200kB] | Run 2 [ani gif, 0.5MB]
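The constraint-based search in the interpretation tree mentioned for global localization can be sketched in a few lines of Python. This is a deliberately simplified illustration, not the method from the publications above: it uses point landmarks instead of multi-segment lines, a single pairwise distance constraint instead of the visibility and line constraints, and it omits the branch that lets an observation remain unmatched. The function name `interpretation_tree` and the tolerance `tol` are assumptions made for this example.

```python
import math

def interpretation_tree(observations, landmarks, tol=0.05):
    """Depth-first search over pairings of observed features to map features.

    Level i of the tree decides which map landmark observation i is paired
    with. A branch is pruned as soon as a pairwise distance between two
    observations disagrees with the distance between their paired landmarks.
    Returns every complete, consistent pairing as a tuple of landmark indices.
    """
    solutions = []

    def extend(assignment):
        i = len(assignment)
        if i == len(observations):
            solutions.append(tuple(assignment))
            return
        for j in range(len(landmarks)):
            if j in assignment:
                continue  # each landmark explains at most one observation
            consistent = all(
                abs(math.dist(observations[i], observations[k])
                    - math.dist(landmarks[j], landmarks[assignment[k]])) <= tol
                for k in range(i)
            )
            if consistent:
                assignment.append(j)
                extend(assignment)
                assignment.pop()

    extend([])
    return solutions

if __name__ == "__main__":
    landmarks = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 7.0)]
    # The first three landmarks as seen from a rotated and shifted robot frame.
    observations = [(1.0, 2.0), (1.0, 6.0), (-2.0, 6.0)]
    print(interpretation_tree(observations, landmarks))
```

Each surviving pairing corresponds to one location hypothesis. Stronger constraints prune more branches, which is the role the text attributes to multi-segment lines.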

High-Precision EKF Pose Tracking

In our research on Kalman filter-based pose tracking, we worked with different features (lines, segments, beacons, vertical edges) and sensors (laser, vision, laser+vision). For laser data, we presented a novel linear-time line extraction method and derived closed-form expressions for the non-linear regression problem of fitting a line to points in polar coordinates minimizing perpendicular errors from the points onto the line. While the basic principle of Kalman filter-based pose tracking with geometric features was demonstrated in the late eighties, we addressed a number of theoretical and practical issues to make it a mature approach that withstands application-like conditions. This includes physically well-grounded error models for all involved sensors (odometry, laser, vision) and probabilistic extraction algorithms that propagate errors on the raw data level onto the feature parameter level. For the purpose of smooth, continuous multi-sensor localization during motion in real time (called on-the-fly localization), we further addressed the problems of proper sensor data registration using high-resolution timestamps and, for the laser sensor, compensating the deformation that the vehicle motion imposes on laser scans. The sound approach to error modeling and propagation, in combination with our techniques for on-the-fly localization, led to smooth and very precise pose estimates. In extensive experiments over several kilometers in uncontrolled, application-like conditions with different robots and environments, we have achieved a localization cycle time far below 100 ms on embedded PowerPC hardware and a subcentimeter positioning accuracy.

Related Publications

Arras K.O., Tomatis N., Jensen B., Siegwart R., "Multisensor On-the-Fly Localization: Precision and Reliability for Applications," Robotics and Autonomous Systems, vol. 34, issue 2-3, pp. 131-143, February 2001. pdf | bib

Arras K.O., Tomatis N., Siegwart R., "Multisensor On-the-Fly Localization Using Laser and Vision", IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS'00), Takamatsu, Japan, 2000. pdf | bib

Arras K.O., Vestli S.J., "Hybrid, high-precision localisation for the mail distributing mobile robot system MOPS", IEEE International Conference on Robotics and Automation (ICRA'98), Leuven, Belgium, 1998. pdf | bib

Arras K.O., Siegwart R., "Feature Extraction and Scene Interpretation for Map-Based Navigation and Map Building", Proceedings of SPIE, vol. 3210, Mobile Robotics XII, pp. 42-53, Pittsburgh, USA, 1997. pdf | bib

Videos

On-the-fly localization of Pygmalion with the notorious Acuity laser range finder [.mov, 18.7MB]
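The line fitting problem mentioned for laser data, fitting a line to points given in polar coordinates while minimizing perpendicular errors, has a well-known closed-form solution in the unweighted case. The Python sketch below shows that unweighted solution only; the expressions in the publications above may additionally weight the points by their uncertainty, which is not reproduced here. The line is written in Hessian form, x cos(alpha) + y sin(alpha) = r, and the function name `fit_line_polar` is an assumption of this example.

```python
import math

def fit_line_polar(ranges, bearings):
    """Unweighted total-least-squares line fit for a laser scan segment.

    ranges, bearings : raw polar readings (rho_i, theta_i)
    Returns (r, alpha), with r >= 0 the distance of the line from the origin
    and alpha the direction of its normal, so that every point on the line
    satisfies x*cos(alpha) + y*sin(alpha) = r.
    """
    xs = [rho * math.cos(theta) for rho, theta in zip(ranges, bearings)]
    ys = [rho * math.sin(theta) for rho, theta in zip(ranges, bearings)]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n

    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))

    # Normal direction that minimizes the summed squared perpendicular errors.
    alpha = 0.5 * math.atan2(-2.0 * sxy, syy - sxx)
    r = mx * math.cos(alpha) + my * math.sin(alpha)

    if r < 0.0:  # keep r non-negative by flipping the normal
        r, alpha = -r, alpha + math.pi
    alpha = math.atan2(math.sin(alpha), math.cos(alpha))  # wrap to (-pi, pi]
    return r, alpha

if __name__ == "__main__":
    # Three readings of a wall along x + y = 2.
    pts = [(0.0, 2.0), (1.0, 1.0), (2.0, 0.0)]
    rho = [math.hypot(x, y) for x, y in pts]
    theta = [math.atan2(y, x) for x, y in pts]
    print(fit_line_polar(rho, theta))
```

Because the solution is closed-form, the fit costs one pass over the points, which suits the embedded hardware and cycle times discussed in this section.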

Do We Want To Share Our Life With Robots? A 2000 People Survey

A new generation of robots is entering our daily life as personal aids, robot companions, or care-takers for the elderly. They share our physical and emotional space and will be equipped with social skills, personality and high degrees of autonomy. In light of this development, it is high time to explore and analyze the public opinion on robotics in general and personal robots in particular. We have carried out a large-scale survey with over 2000 participants on the question of whether people want to share their life with a robot. There is good news for the robotics community: the general attitude towards potential robotic coworkers and flatmates is positive, although surprising in some points. We investigate the answering behavior of different age, gender and language groups, find correlations in the data, discuss interpretations, speculate about the answers and finally conclude: Whom are we building robots for and what should they be like?

Related Publications

Arras K.O., Cerqui D., "Do we want to share our lives and bodies with robots? A 2000 people survey", Technical Report Nr. 0605-001, Autonomous Systems Lab, Swiss Federal Institute of Technology Lausanne (EPFL), June 2005. pdf | bib

Cerqui D., Arras K.O., "Human Beings and Robots: Towards a Symbiosis? A 2000 People Survey", International Conference on Socio Political Informatics and Cybernetics (PISTA'03), Orlando, Florida, USA, 2003. pdf | bib

Robots in Exhibitions and Public Spaces

The number of robots that are deployed in museums, exhibitions and public places is steadily growing. They mark the emergence of a new application domain of robots that poses a number of new and fascinating challenges for both the researcher and the exhibition maker.

Expo.02 Robotics Exhibition

The Robotics pavilion at the Swiss National Exhibition Expo.02 is to this day the biggest deployment of robots in a public space. Eleven fully autonomous robots interacted with almost 700,000 visitors during a five-month period, seven days a week, twelve hours per day. Their tasks included tour guiding, picture taking and entertainment. The research challenges ranged from robust multi-hypothesis localization in highly populated environments, smooth yet fast obstacle avoidance and path planning, multi-robot coordination, multi-modal human-robot interaction, and visitor flow management to robot design and system integration. With this large-scale project, we have demonstrated that the deployment of autonomous, freely navigating robots in a mass exhibition is feasible.

Related Publications

Siegwart R., Arras K.O., Bouabdallah S., Burnier D., Froidevaux G., Greppin X., Jensen B., Lorotte A., Mayor L., Meisser M., Philippsen R., Piguet R., Ramel G., Terrien G., Tomatis N., "Robox at Expo.02: A Large-Scale Installation of Personal Robots", Robotics and Autonomous Systems, vol. 42, issue 3-4, pp. 203-222, March 2003. pdf | bib

Arras K.O., Philippsen R., Tomatis N., de Battista M., Schilt M., Siegwart R., "A Navigation Framework for Multiple Mobile Robots and its Application at the Expo.02 Exhibition," IEEE International Conference on Robotics and Automation (ICRA'03), Taipei, Taiwan, 2003. pdf | bib

Tomatis N., Terrien G., Piguet R., Burnier D., Bouabdallah S., Arras K.O., Siegwart R., "Designing a Secure and Robust Mobile Interacting Robot for the Long Term," IEEE International Conference on Robotics and Automation (ICRA'03), Taipei, Taiwan, 2003. pdf | bib

Arras K.O., Tomatis N., Siegwart R., "Robox, a Remarkable Mobile Robot for the Real World," Experimental Robotics VIII, Siciliano B. and Dario P. (eds.), Advanced Robotics Series, Springer, 2003. pdf | bib

Videos

Robotics @ Expo.02 [YouTube link] | Scenes from Expo.02 [.mov, 5.7 MB]

IROS 2002 Workshop on Robots in Exhibitions

This full-day workshop focuses on autonomous, socially interactive robots deployed in a public, exhibition-like space. It brings together robotics researchers, practitioners and end-users from diverse backgrounds to discuss past and ongoing projects, recent developments and prospects for the future. The proceedings (see below) contain 14 papers that give a comprehensive overview of the state of the art. More information: Workshop homepage

Related Publications

Arras K.O., Burgard W. (eds.), "Robots in Exhibitions", IROS 2002 Workshop Proceedings, Lausanne, Switzerland, 2002. pdf [11 MB] | bib

Obstacle Avoidance

In our research on obstacle avoidance we focus on a combination of sensor-based, reactive methods with local path planning. The former ensures collision freeness under constraints from geometry, kinematics and dynamics while the latter avoids local minima. Our approaches account for polygonal robot shapes, for which we derive closed-form expressions for the distance-to-collision problem, avoiding the use of look-up tables. All our approaches have been tested in simulation and on real robots with different shapes, achieving a cycle rate of 10 Hz under full load of the embedded CPU. During two long-term experiments, one over 5 km and one over 3,300 km travel distance, the methods demonstrated their performance impressively.

Related Publications

Arras K.O., Persson J., Tomatis N., Siegwart R., "Real-Time Obstacle Avoidance For Polygonal Robots With A Reduced Dynamic Window," IEEE International Conference on Robotics and Automation (ICRA'02), Washington DC, USA, 2002. pdf | bib

Arras K.O., Philippsen R., Tomatis N., de Battista M., Schilt M., Siegwart R., "A Navigation Framework for Multiple Mobile Robots and its Application at the Expo.02 Exhibition," IEEE International Conference on Robotics and Automation (ICRA'03), Taipei, Taiwan, 2003. pdf | bib

Videos

On-the-fly replanning around U-shaped obstacle [.mp4, 5.1MB]
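How a reactive method can guarantee collision freeness under dynamic constraints can be illustrated with the admissibility test of the classical dynamic window approach, on which the publication title above suggests the work builds. The Python sketch below is a one-dimensional simplification under stated assumptions: it considers translational speed only, treats the distance to collision as a given number instead of deriving it for a polygonal robot, and uses the hypothetical names `admissible_speeds`, `a_max` and `samples`. It is not the reduced dynamic window described in the publication.

```python
import math

def admissible_speeds(v_now, a_max, dt, dist_to_collision, v_max, samples=11):
    """Sample the dynamic window and keep only speeds that allow a full stop.

    The window holds the speeds reachable within one control cycle dt given
    the acceleration limit a_max. A speed v is admissible if the robot can
    still brake to a standstill before the obstacle, i.e. if
    v <= sqrt(2 * dist_to_collision * a_max).
    """
    if samples < 2:
        raise ValueError("need at least two samples")
    lo = max(0.0, v_now - a_max * dt)
    hi = min(v_max, v_now + a_max * dt)
    step = (hi - lo) / (samples - 1)
    window = [lo + i * step for i in range(samples)]
    v_stop = math.sqrt(2.0 * dist_to_collision * a_max)
    return [v for v in window if v <= v_stop]

if __name__ == "__main__":
    # Robot at 1.0 m/s, 0.5 m/s^2 acceleration limit, 10 Hz cycle, obstacle 0.9 m ahead.
    print(admissible_speeds(1.0, 0.5, 0.1, 0.9, v_max=2.0))
```

In a full controller, the remaining speeds would be scored by an objective that trades off heading, clearance and velocity; an empty result means the robot has to brake.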

Robot Design and Integration

Robotics is the science that releases machines into the real world full of noise and unpredictable events. This is why extensive experimentation is an integral part of our research practice to validate whether a theory withstands these conditions. However, when working with real robots, one faces limitations of the embedded CPU, real-time constraints and system integration aspects. Most commercially available robot platforms and operating systems simply do not meet real-world requirements in the above sense. For this reason, we design our platforms ourselves. We have built several robotic platforms that were used in research and in applications over thousands of kilometers. Our robots are designed as general-purpose multi-axis systems with standard hardware components (VME, cPCI, IP I/O peripherals etc.) made for fully autonomous operation (no uplinks at all) under harsh environmental conditions. All robots run the hard real-time operating system XO/2. This powerful (though exotic) choice allows us, for instance, to run odometry as a periodic real-time task at 1 kHz with a timestamp resolution of 100 µs.

Related Publications

Brega R., Tomatis N., Arras K.O., "The Need for Autonomy and Real-Time in Mobile Robotics: A Case Study of XO/2 and Pygmalion", IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS'00), Takamatsu, Japan, 2000. pdf | bib

Tomatis N., Terrien G., Piguet R., Burnier D., Bouabdallah S., Arras K.O., Siegwart R., "Designing a Secure and Robust Mobile Interacting Robot for the Long Term," IEEE International Conference on Robotics and Automation (ICRA'03), Taipei, Taiwan, 2003. pdf | bib

Siegwart R., Arras K.O., Jensen B., Philippsen R., Tomatis N., "Design, Implementation and Exploitation of a New Fully Autonomous Tour Guide Robot," 1st International Workshop on Advances in Service Robotics (ASER'03), Bardolino, Italy, 2003. pdf | bib

Tomatis N., Brega R., Arras K.O., Jensen B., Moreau B., Persson J., Siegwart R., "A Complex Mechatronic System: from Design to Application," IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM'01), Como, Italy, 2001. bib
